Virtual Reality (VR) is the use of computer technology to create a simulated environment. Unlike traditional user interfaces (and unlike AR), VR places the user inside an experience: they are immersed in a 3D space and able to interact with it. The main purpose of VR is to “create and enhance an imaginary reality for gaming, entertainment and play” (augment – augment.com). What, then, is the main purpose of AR? Mainly entertainment, like VR. However, AR also has practical applications. The military, for example, uses augmented reality to assist men and women making repairs out in the field. Museums have also recently started overlaying facts and figures onto a live view of their displays. This adds to the experience and replaces reading a plaque or listening to an audio guide, which many visitors find boring. Read more about this recent development at inavateonthenet.net.

AR and VR are similar in many ways. An article published on augment.com says that they both “leverage some of the same types of technology, and they each exist to serve the user with an enhanced or enriched experience”. In other words, both use comparable technology to reach the same goal. Both have also recently been used mainly as sources of media entertainment, for example VR games and experiences and AR mobile applications. This has led developers and companies to invest in new improvements, adaptations and equipment, releasing more creative products and apps that support these technologies for “die-hard” fans. Beyond entertainment, AR and VR could become groundbreaking technologies in medicine. They could make remote surgery a real possibility, and they have already been used to help treat PTSD (post-traumatic stress disorder) and support patients' recovery. They do have their differences, though.

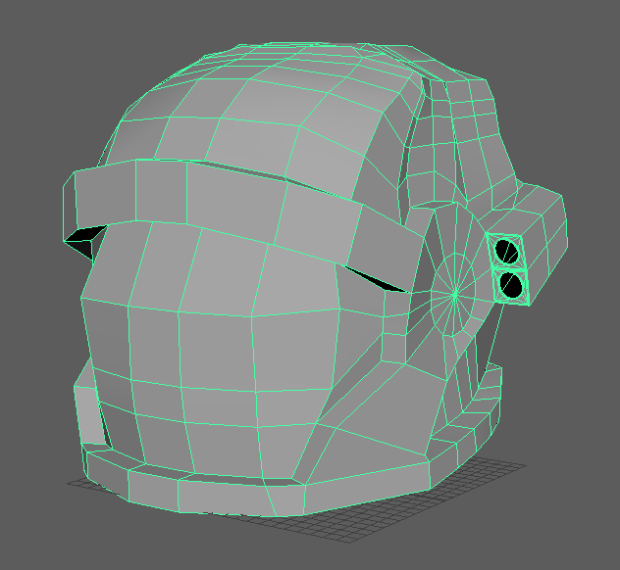

The two technologies are delivered differently. According to an article on wareable.com, VR is usually delivered to the user through a head-mounted display, a handheld controller, or both. The Oculus Touch controllers, used alongside a headset, are a good example. Augmented reality, on the other hand, is much more accessible to the average consumer, because it is increasingly built into mobile phones, handhelds and other portable devices. These devices change how the real world looks by overlaying digital images and graphics on it, creating their own small world. Which one is right for you depends on whether you want to be “completely isolated from the real world while immersed in a world that is completely fabricated” (techtimes.com), or to stay in touch with the real world while interacting with virtual objects through a phone.

In conclusion, VR and AR are distinct technologies. AR is more accessible to the average user, while VR is aimed at the more hardcore fan. Both offer unique and fun experiences, and both have uses outside entertainment, such as in medicine. Because they are built for different purposes, there is no clear winner between the two, but I personally prefer VR. It offers a uniquely eye-opening experience, and it could have many more uses in the real world than AR.